When AI Becomes a Crutch in UX Design

Thoughts on the over-reliance on AI tools as a replacement for UX processes

Generative AI (genAI) has the potential to revolutionize the way we create and design. By unlocking our creativity, genAI can help us explore new possibilities, discover new insights, and scale our ideas in ways that were never before possible. But how accurate are the models?

I feel that this is the wrong question to ask. Instead, we should focus on how we can co-evolve with genAI tools in order to scale and systematize our product creation processes.

In this article, I talk about:

How scale amplifies ‘inaccurate’ content

How product creators can co-evolve with genAI

Bias in models can hinder AI-powered product inclusion & equity

What steps we can take to mitigate inaccurate content

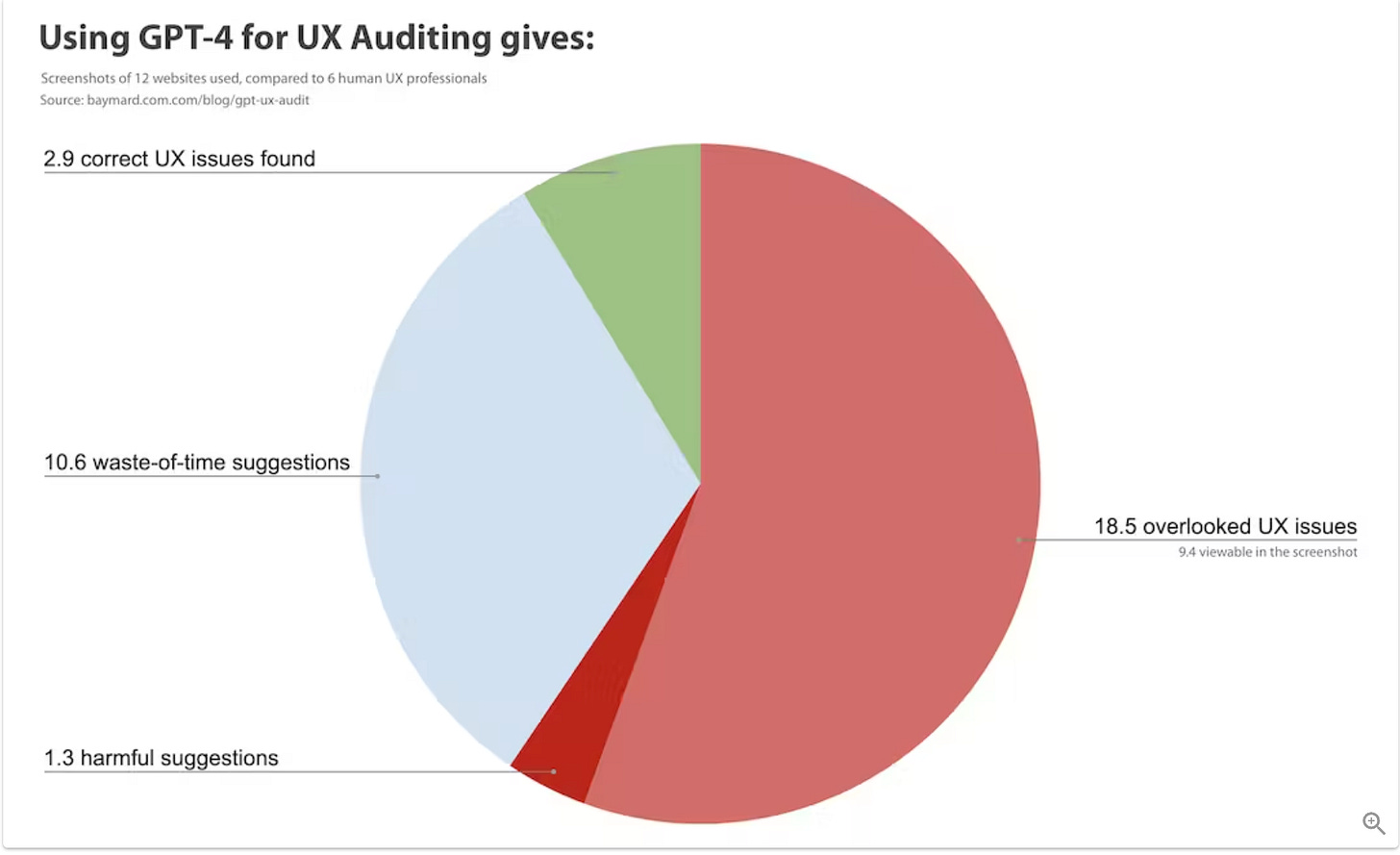

I was thinking about this topic this week because of a recent article by Baymard Institute, which found that ChatGPT had an 80% error rate and a 14-26% discoverability rate, meaning the tool was missing the mark in providing UX guidance. Some of the feedback from the tool was vague, repetitive and inaccurate. This is not surprising, given the reasons I outline below regarding scale, co-evolution and inherent model biases.

Scale amplifies ‘inaccurate’ content

As AI scales, it becomes more difficult to ensure the accuracy of the content it produces. This is because AI models are trained on data, and if that data is inaccurate or biased, the model will learn to produce inaccurate or biased content.

But why is this?

Larger datasets can contain more errors and biases. When training an AI model, it is important to use a dataset that is clean and representative of the real world. However, as datasets get larger, it becomes more difficult to ensure that they are free of errors and biases.

AI models can become more complex as they are scaled. Handling larger and more complex datasets usually calls for larger and more intricate models, which makes it more difficult to understand how a model works and to identify and correct its errors.

It can be more difficult to monitor and evaluate AI models as they scale. As AI models are deployed to more and more users, it can become more difficult to monitor their performance and evaluate their accuracy. This can lead to inaccurate content being produced and disseminated without being detected.

How product creators can co-evolve with genAI

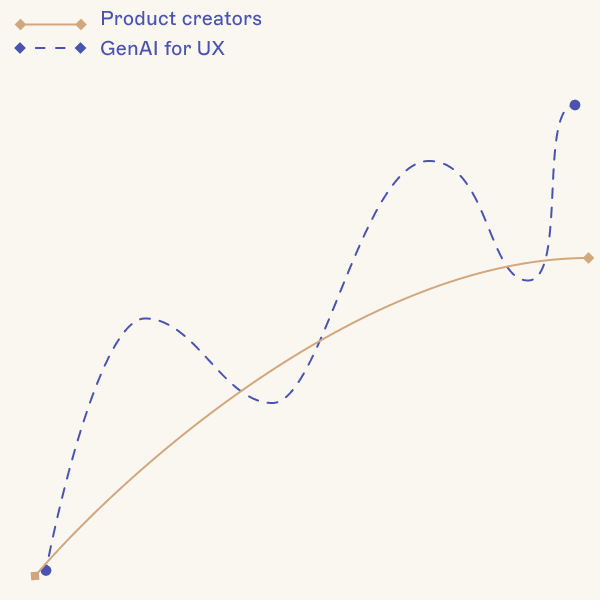

Product creators can use generative AI tools to augment their processes by co-evolving with them. By pairing the capabilities of conversational large language models (LLMs) with the knowledge of product managers, UX designers, and UX writers, we can create an impressive automation solution for product creation.

Bias in models can hinder AI-powered product inclusion & equity

I recently wrote about the concept of AI-powered product inclusion and equity design as a method of designing systematically for all users. Bias exists across the product creation lifecycle, leading to products that are not designed for, or do not meet the needs of, certain groups.

If a training dataset is biased, the AI will learn those biases and reflect them in its output. For example, if the dataset contains mostly images of white people, an image generator such as Midjourney is more likely to generate images of white people.

Examples of biased data:

Racial bias

Gender bias

Age bias

Occupational bias
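One way to make the dataset point concrete is a quick representation check before any data is used for training or evaluation. The sketch below is only an illustration under my own assumptions: the `representation_report` function, the `min_share` threshold of 10% and the group labels are all hypothetical, and a real audit would need far more care about how groups are defined and labelled.

```python
from collections import Counter


def representation_report(labels, min_share=0.10):
    """Return each group's share of the dataset and flag groups below min_share.

    `labels` is a list with one group label per record (hypothetical metadata).
    `min_share` is an arbitrary illustrative threshold, not an established standard.
    """
    counts = Counter(labels)
    total = sum(counts.values())
    report = {}
    for group, count in counts.items():
        share = count / total
        report[group] = {
            "share": round(share, 3),
            "under_represented": share < min_share,
        }
    return report


# Hypothetical metadata for 100 images: one group dominates the dataset.
sample_labels = ["group_a"] * 85 + ["group_b"] * 10 + ["group_c"] * 5
report = representation_report(sample_labels)

for group, stats in sorted(report.items()):
    print(group, stats)
```

A report like this does not fix bias by itself, but it gives product creators a concrete number to discuss before a skewed dataset shapes what the tool generates.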

What steps we can take to mitigate inaccurate content

Utilize AI tools for ‘coaching’ rather than automation. Product creators are the experts and we must take on the role of auditing the output from these tools.

Develop a robust testing method to provide feedback to the models. AI models should be regularly tested and evaluated to ensure that they are performing as expected and that they are not producing inaccurate content.

Implement monitoring and feedback mechanisms. AI systems should be monitored to detect and correct errors as quickly as possible. Feedback mechanisms should also be implemented to allow users to report inaccurate content.

Expand a trusted tester program. Include traditionally marginalized groups in your trusted tester program. They will have a unique perspective to help you train the models.
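The auditing and testing steps above can be sketched as a small comparison between what the tool suggested and what an expert reviewer actually found. This is a minimal sketch under stated assumptions: the `audit_suggestions` function, the two metrics and the sample issues are hypothetical, and the definitions of "error rate" and "coverage" here are mine for illustration, not necessarily how any published study computed its figures.

```python
def audit_suggestions(ai_suggestions, expert_issues):
    """Compare AI-suggested UX issues against an expert's own findings.

    error_rate: share of AI suggestions the expert did not confirm.
    coverage:   share of expert-identified issues the AI also surfaced.
    Both definitions are illustrative assumptions.
    """
    ai = set(ai_suggestions)
    expert = set(expert_issues)
    confirmed = ai & expert

    error_rate = round(1 - len(confirmed) / len(ai), 2) if ai else 0.0
    coverage = round(len(confirmed) / len(expert), 2) if expert else 0.0

    return {
        "error_rate": error_rate,
        "coverage": coverage,
        # Unconfirmed suggestions go back to a human for review.
        "to_review": sorted(ai - expert),
    }


# Hypothetical review of a single checkout page.
ai_suggestions = [
    "low contrast",
    "missing labels",
    "add carousel",
    "too many fonts",
    "bigger logo",
]
expert_issues = [
    "low contrast",
    "missing labels",
    "unclear error messages",
    "no guest checkout",
]

result = audit_suggestions(ai_suggestions, expert_issues)
print(result)
```

Tracking these two numbers over time, page by page, is one lightweight way to keep the product creator in the auditing role while still using the tool as a coach.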